There are currently two methods:

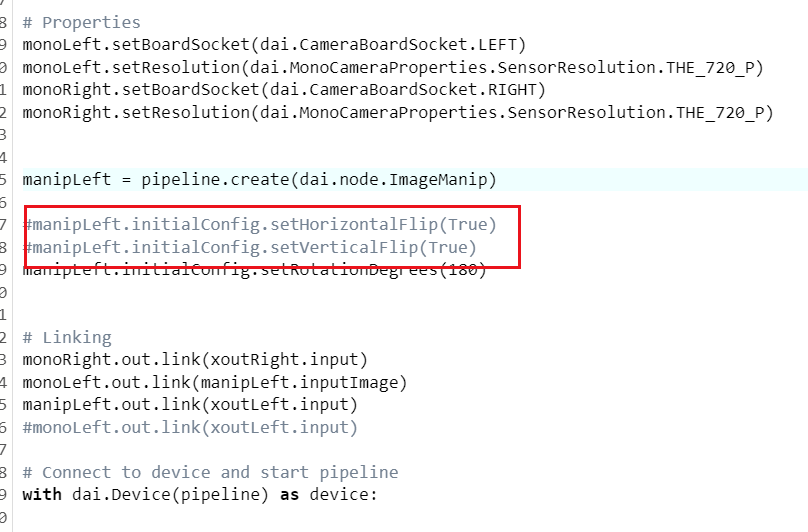

1. Use ImageManip

    #!/usr/bin/env python3

    import cv2
    import depthai as dai

    # Create pipeline
    pipeline = dai.Pipeline()

    # Define sources and outputs
    monoLeft = pipeline.create(dai.node.MonoCamera)
    monoRight = pipeline.create(dai.node.MonoCamera)
    xoutLeft = pipeline.create(dai.node.XLinkOut)
    xoutRight = pipeline.create(dai.node.XLinkOut)

    xoutLeft.setStreamName('left')
    xoutRight.setStreamName('right')

    # Properties
    monoLeft.setBoardSocket(dai.CameraBoardSocket.LEFT)
    monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)
    monoRight.setBoardSocket(dai.CameraBoardSocket.RIGHT)
    monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_720_P)

    manipLeft = pipeline.create(dai.node.ImageManip)
    #manipLeft.initialConfig.setHorizontalFlip(True)
    #manipLeft.initialConfig.setVerticalFlip(True)
    manipLeft.initialConfig.setRotationDegrees(180)

    # Linking
    monoRight.out.link(xoutRight.input)
    monoLeft.out.link(manipLeft.inputImage)
    manipLeft.out.link(xoutLeft.input)
    #monoLeft.out.link(xoutLeft.input)

    # Connect to device and start pipeline
    with dai.Device(pipeline) as device:

        # Output queues will be used to get the grayscale frames from the outputs defined above
        qLeft = device.getOutputQueue(name="left", maxSize=4, blocking=False)
        qRight = device.getOutputQueue(name="right", maxSize=4, blocking=False)

        while True:
            # Instead of get (blocking), we use tryGet (non-blocking) which will return the available data or None otherwise
            inLeft = qLeft.tryGet()
            inRight = qRight.tryGet()

            if inLeft is not None:
                cv2.imshow("left", inLeft.getCvFrame())

            if inRight is not None:
                cv2.imshow("right", inRight.getCvFrame())

            if cv2.waitKey(1) == ord('q'):
                break

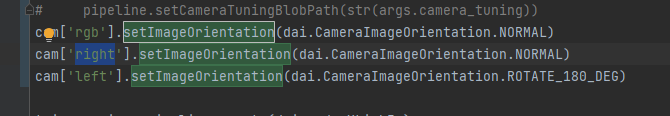
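The script above leaves `setHorizontalFlip` and `setVerticalFlip` commented out and uses `setRotationDegrees(180)` instead. As a rough illustration of why these options are related, the sketch below checks on a tiny made-up grid that a 180° rotation gives the same pixel layout as a horizontal flip followed by a vertical flip. It runs on the host with plain Python lists and no device; the helper names (`flip_horizontal`, `flip_vertical`, `rotate_180`) and the sample grid are hypothetical and only show the geometry, not how ImageManip works internally.

```python
def flip_horizontal(img):
    """Mirror left-right: reverse the pixel order within each row."""
    return [row[::-1] for row in img]


def flip_vertical(img):
    """Mirror top-bottom: reverse the order of the rows."""
    return [row[:] for row in img[::-1]]


def rotate_180(img):
    """Rotate by 180 degrees: reverse the rows and the pixels in each row."""
    return [row[::-1] for row in img[::-1]]


# Hypothetical 2x3 "image" with distinct pixel values
image = [[1, 2, 3],
         [4, 5, 6]]

rotated = rotate_180(image)
flipped_both = flip_vertical(flip_horizontal(image))

print(rotated)                  # [[6, 5, 4], [3, 2, 1]]
print(rotated == flipped_both)  # True
```

On real frames returned by `getCvFrame()`, the same host-side operation can be done with `cv2.flip(frame, -1)`, which flips around both axes.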

2. Use setImageOrientation